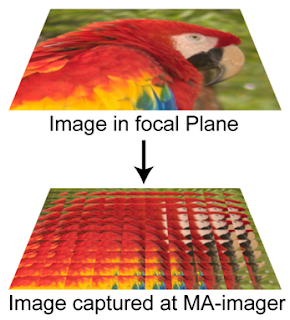

So the guys at Stanford have come up with a CMOS sensor that captures an array of images and distills them into a single deep pixel format image. From that you can extract multiple 3D depth images, refocus, and recompose the final image. This would be the ultimate raw camera image. The only caveat is the reduced resolution. If the sensor is 10 megapixels, then a 10×10 array subdivides it into 100 sub-pictures of roughly 0.1 megapixels each. Not quite ready for prime time, but very promising. Check the link for more detail.
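To put a number on that resolution trade-off, here is a quick back-of-the-envelope sketch. It assumes the sensor's pixels are split evenly across the array with no overlap and no pixels lost between sub-images, which may not match the actual chip. The function name `sub_image_pixels` is made up for this example.

```python
def sub_image_pixels(sensor_pixels: int, grid_rows: int, grid_cols: int) -> int:
    """Pixels left for each sub-image when the sensor is split evenly.

    Assumption: every pixel belongs to exactly one sub-image (no overlap,
    no dead area between sub-arrays).
    """
    if grid_rows <= 0 or grid_cols <= 0:
        raise ValueError("grid dimensions must be positive")
    return sensor_pixels // (grid_rows * grid_cols)


sensor_pixels = 10_000_000          # the 10 megapixel sensor from the example
sub_images = 10 * 10                # a 10x10 array gives 100 sub-pictures
per_image = sub_image_pixels(sensor_pixels, 10, 10)

print(f"{sub_images} sub-images of {per_image:,} pixels each")
```

So each of the 100 sub-pictures ends up with about 100,000 pixels, or 0.1 megapixels, under those assumptions.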